A brief overview of the CI/CD process

To understand the Jenkins tool, we should first understand the CI/CD practice and why it is required.

CI/CD is an integral part of any DevOps culture or practice, whatever the project. In today's IT industry, it is hard to imagine a project without a strong CI/CD practice.

In general, if the operations side of a project runs without CI/CD or DevOps practices, the project can be considered at risk. That is why industry leaders emphasize implementing CI/CD practices across their projects.

A few of the parameters that can be checked to judge the maturity of DevOps in a project are: i) Are we following CI/CD? ii) Are we following an agile methodology? iii) Are we managing our infrastructure as code? iv) Are our artifacts versioned?

The answers to these questions help us assess the maturity of the CI/CD and DevOps practice in a project.

A healthy DevOps practice within a project is a good sign for quality delivery and for the overall sustainability of the project.

There are a few terms one should know to understand the CI/CD concepts:

CI -> Continuous Integration, CD -> Continuous Delivery, CD -> Continuous Deployment

Continuous Integration (CI) makes software releases easier. It involves merging code back to the main/master branch frequently, so that code is integrated into the shared repository multiple times a day. A good continuous integration setup is always paired with a robust test suite that executes automatically.

To summarize the CI process: once a developer checks in new code, a new build is triggered and the automated test suites run against it to detect integration problems. If the build or the test phase fails, the respective team is notified.
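The flow described above can be sketched in a few lines of Python. This is only an illustrative model, not how Jenkins is implemented: `run_ci`, `CIResult`, and the `build`, `tests`, and `notify` callables are hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CIResult:
    commit_id: str
    stage_failed: str  # "" when every stage passed, else "build" or "test"


def run_ci(commit_id: str,
           build: Callable[[], bool],
           tests: List[Callable[[], bool]],
           notify: Callable[[str], None]) -> CIResult:
    """Run the build, then the tests, for one check-in; notify on failure."""
    # A check-in triggers a new build first.
    if not build():
        notify(f"Build failed for commit {commit_id}")
        return CIResult(commit_id, "build")
    # The automated tests run only against a successful build.
    for test in tests:
        if not test():
            notify(f"Tests failed for commit {commit_id}")
            return CIResult(commit_id, "test")
    return CIResult(commit_id, "")


if __name__ == "__main__":
    messages: List[str] = []
    result = run_ci("abc123",
                    build=lambda: True,
                    tests=[lambda: True, lambda: False],
                    notify=messages.append)
    print(result.stage_failed, messages)
```

In a real setup the `build` and `tests` steps would call the project's build tool and test runner, and `notify` would send an email or chat message to the team.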

The term CD is used for both Continuous Delivery and Continuous Deployment.

Continuous Delivery (CD) is the process of automating the entire software release process. In other words, it is CI plus automatically preparing the build and tracking its promotion/deployment to controlled environments. A team member with the appropriate authority can promote the build to controlled environments (UAT, PROD) with minimal manual intervention, mostly with a single click.

Continuous Deployment (CD) is a practice in which the deployment step is also automated: every change that passes the build is deployed automatically to the production environment without any manual intervention. The developer just reviews the pull request and merges it into the respective branch. From there onwards, the CI/CD process takes over and makes sure the builds run properly with the test suites. If everything completes successfully, the build is deployed to production automatically and the respective teams are informed.
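The difference between the two meanings of CD comes down to whether a person has to approve the production release. A minimal sketch of that decision, assuming a hypothetical `should_deploy_to_production` helper and the mode names `"delivery"` and `"deployment"` (neither comes from Jenkins itself):

```python
from typing import Optional


def should_deploy_to_production(build_passed: bool,
                                mode: str,
                                approved_by: Optional[str] = None) -> bool:
    """Decide whether a build goes to production under the given CD mode."""
    # A build that failed its build or test stage is never released.
    if not build_passed:
        return False
    if mode == "deployment":
        # Continuous Deployment: no manual gate, release automatically.
        return True
    if mode == "delivery":
        # Continuous Delivery: an authorized team member must promote it.
        return approved_by is not None
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    print(should_deploy_to_production(True, "delivery"))
    print(should_deploy_to_production(True, "delivery", approved_by="release-manager"))
    print(should_deploy_to_production(True, "deployment"))
```

Everything before the gate (build, tests, packaging) is the same in both modes; only the last step differs.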

The picture below depicts continuous integration, continuous deployment, and continuous delivery diagrammatically.

What is Jenkins?

Many people have heard of Jenkins without knowing exactly what it is, so their first question is: what exactly is Jenkins? In short, Jenkins is an open source tool and one of the industry leaders in the CI/CD domain. It has robust capabilities to automate the build, deployment, and testing of projects of varied complexity, developed with varied technologies.

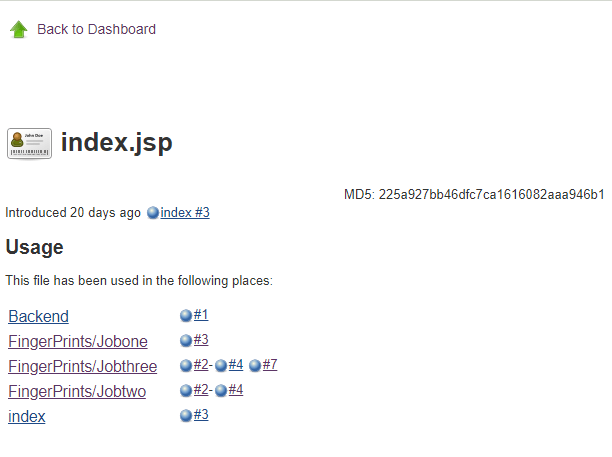

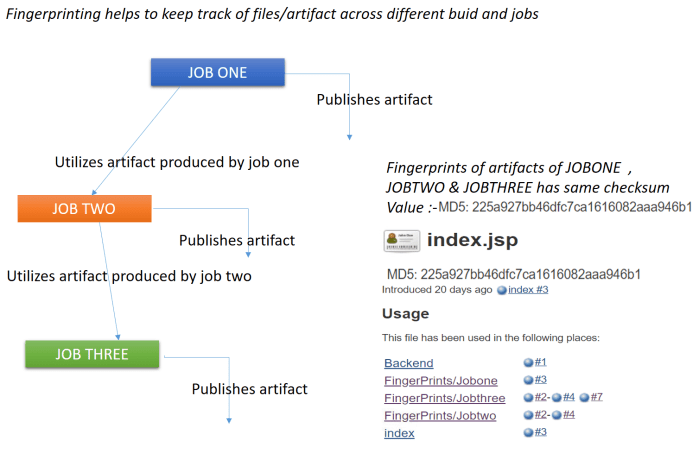

You may hear in your project that the team does not know which build is running in which environment, or that there is no centralized system for distributing build artifacts such as jar, ear, or msi files.

That is the right place to introduce Jenkins, which can resolve these issues, bring a CI/CD process to the project, and make artifacts, builds, and test automation more reliable and transparent.

Jenkins as a DevOps engine

Jenkins is one of the core tools of the DevOps toolchain. Including Jenkins as the CI/CD expert for your project is a sound decision: it moves the operations side of your project toward an agile methodology and makes it more transparent and visible.

In the early era, when no CI/CD practice was followed, it was very hard to identify what got deployed, who deployed it, why the build broke, and which build number got deployed. It was equally hard to detect the versions of artifacts or to get notifications about build and deployment status.

After including Jenkins as the CI/CD expert, most projects observe that the issues mentioned above come to an end. The development team can rely on Jenkins to make sure that code and artifacts are deployed on time, in a controlled manner and with minimal manual effort, and that the team is notified of the status.
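The questions listed above (what was deployed, who deployed it, which build number, which artifact version, with what status) amount to a record kept for every deployment. The sketch below models such a record in Python. It is an illustration only and not Jenkins's actual data model; `DeploymentRecord`, `DeploymentLog`, and all field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DeploymentRecord:
    build_number: int
    artifact: str      # e.g. a jar, ear, or msi file name
    version: str
    environment: str   # e.g. "UAT" or "PROD"
    deployed_by: str
    status: str        # "SUCCESS" or "FAILURE"


class DeploymentLog:
    """Append-only history of deployments, queried per environment."""

    def __init__(self) -> None:
        self._records: List[DeploymentRecord] = []

    def record(self, rec: DeploymentRecord) -> None:
        self._records.append(rec)

    def current(self, environment: str) -> Optional[DeploymentRecord]:
        """Return the most recent successful deployment in an environment."""
        for rec in reversed(self._records):
            if rec.environment == environment and rec.status == "SUCCESS":
                return rec
        return None


if __name__ == "__main__":
    log = DeploymentLog()
    log.record(DeploymentRecord(41, "app.jar", "1.4.0", "PROD", "asha", "SUCCESS"))
    log.record(DeploymentRecord(42, "app.jar", "1.5.0", "PROD", "ravi", "FAILURE"))
    print(log.current("PROD"))
```

With a history like this, "which build is running in PROD?" becomes a lookup instead of a question passed around the team.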

Jenkins, its alternatives and its competitors

As in every industry, each good product has competitors, and the same is true of Jenkins. Apart from Jenkins, there are other similarly robust tools on the market that do a good job and serve the same purpose.

To name a few: Bamboo, TeamCity, Deploy IT, CircleCI, UrbanCode.

All of these are popular CI/CD tools used across the industry to fulfill the CI/CD needs of projects. Below is a chart comparing Jenkins, TeamCity, and Bamboo. As it shows, one of the biggest benefits of Jenkins is that it is open source and free of cost.

Features & Flexibility of Jenkins

Whenever we include a tool in a project, we analyze how flexible it is: whether it can sustain the changes happening across projects and technologies, and how easily it can adapt to change.

Here, too, Jenkins scores very well. Below are the key points that make Jenkins flexible and adaptable to change:

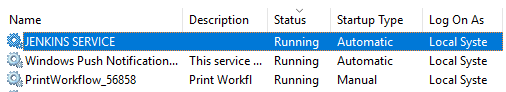

- It is OS independent and can run on Linux, Windows, or Mac environments

- It has its own built-in server, so it does not depend on any external application server to run

- It integrates with a wide range of tools and plugins spanning source code management, build scripts, and notifications

- It provides hundreds of freely available plugins to cater to different project needs from a CI/CD perspective

Value add using Jenkins

- It adds agility to the project as the team starts following the DevOps practices of continuous integration and deployment

- The build and release process becomes more controlled, and changes can be tracked

- The operations side of DevOps becomes more transparent and traceable

- The team can rely on Jenkins to take care of build, deployment, and test automation activities with minimal manual effort

Conclusion

If your project still does not follow CI/CD and DevOps practices, it is high time to include them as part of the best practices followed across the industry. Including Jenkins as a tool will bring more agility, transparency, and control to the project.