Optimizing Data Transfer with AWS Database Migration Service (DMS)

Businesses continually look for ways to streamline operations, reduce costs, and become more agile, and moving data to the cloud is one common way to pursue those goals. AWS Database Migration Service (AWS DMS, referred to in this article as Amazon DMS) is a managed service that simplifies migrating databases to Amazon Web Services (AWS).

What is Amazon DMS?

Amazon DMS is a managed service that facilitates the migration of databases to AWS quickly and securely. It supports various database engines, including:

- Amazon Aurora

- PostgreSQL

- MySQL

- MariaDB

- Oracle

- SQL Server

- SAP ASE

- and more!

With Amazon DMS, businesses can migrate data while minimizing downtime, making it ideal for operations that require continuous availability.

Key Features of Amazon DMS

- Ease of Use: Amazon DMS is designed to be user-friendly, allowing you to start a new migration with just a few clicks in the AWS Management Console.

- Minimal Downtime: A key feature of Amazon DMS is its ability to keep the source database operational during the migration, ensuring minimal disruption to business activities.

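- Homogeneous and Heterogeneous Migrations: Amazon DMS supports both homogeneous migrations (same database engine on both sides) and heterogeneous migrations (different engines, for example Oracle to PostgreSQL), so you can switch to the engine that best suits your workload. Heterogeneous migrations usually also require converting the schema first.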

- Continuous Data Replication: Using change data capture (CDC), Amazon DMS continuously replicates changes from your source database to your target database, keeping them synchronized throughout the migration.

- Reliability and Scalability: Amazon DMS runs on AWS infrastructure. Replication instances can be deployed across multiple Availability Zones (Multi-AZ) for high availability, and their size can be changed to match your data workload.

- Cost-Effective: With a pay-as-you-go pricing model, you pay only for the resources used during the migration.

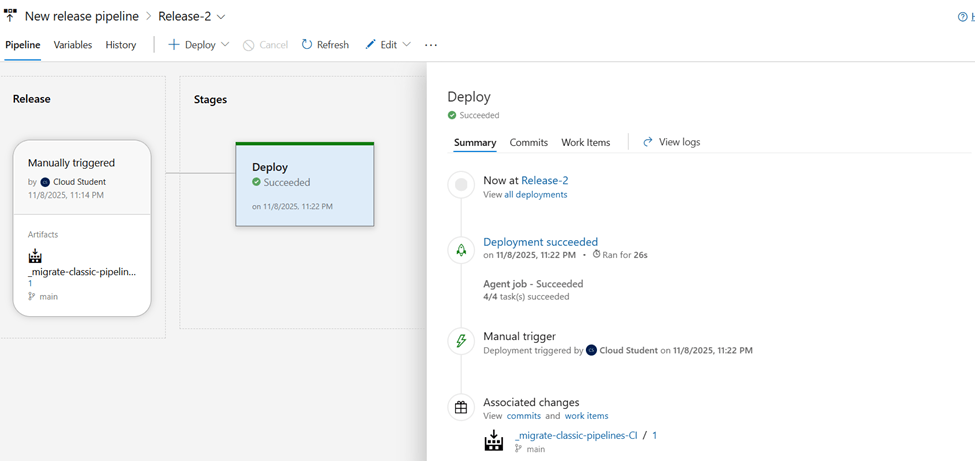

How Amazon DMS Works

Step 1: Set Up the Source and Target Endpoints

The initial step in using Amazon DMS is to configure your source and target database endpoints. The source endpoint is the database you are migrating from, and the target endpoint is the database you are migrating to.
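As a rough illustration, the two endpoints can be described as plain parameter dictionaries shaped for the DMS `CreateEndpoint` API. This is a minimal sketch, not a definitive setup: the helper `endpoint_params`, the host names, and the credentials are all hypothetical, and the actual API call is left as a comment because it requires `boto3` and AWS credentials.

```python
def endpoint_params(identifier, endpoint_type, engine, host, port,
                    database, username, password):
    """Build keyword arguments for the DMS CreateEndpoint API call."""
    if endpoint_type not in ("source", "target"):
        raise ValueError("endpoint_type must be 'source' or 'target'")
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": endpoint_type,
        "EngineName": engine,
        "ServerName": host,
        "Port": port,
        "DatabaseName": database,
        "Username": username,
        "Password": password,
    }


# Hypothetical example values: an on-premises MySQL database migrating
# to an Aurora PostgreSQL cluster.
source = endpoint_params(
    "orders-source", "source", "mysql",
    "onprem-db.example.com", 3306, "orders", "dms_user", "change-me",
)
target = endpoint_params(
    "orders-target", "target", "aurora-postgresql",
    "orders-cluster.example.com", 5432, "orders", "dms_user", "change-me",
)

# To create the endpoints for real (requires boto3 and AWS credentials):
#   import boto3
#   dms = boto3.client("dms")
#   dms.create_endpoint(**source)
#   dms.create_endpoint(**target)
```

In practice, credentials would come from a secrets store rather than being written inline as they are here.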

Step 2: Create a Replication Instance

Next, create a replication instance, the managed compute resource that runs the replication software and executes your migration tasks.
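A replication instance is defined mainly by its size, storage, and availability settings. The sketch below assembles those settings for the DMS `CreateReplicationInstance` API. The helper name, the identifier, and the default values (instance class, 50 GB of storage) are illustrative assumptions rather than recommendations.

```python
def replication_instance_params(identifier, instance_class="dms.t3.medium",
                                storage_gb=50, multi_az=False):
    """Build keyword arguments for the DMS CreateReplicationInstance call."""
    if storage_gb <= 0:
        raise ValueError("storage_gb must be positive")
    return {
        "ReplicationInstanceIdentifier": identifier,
        "ReplicationInstanceClass": instance_class,
        "AllocatedStorage": storage_gb,
        "MultiAZ": multi_az,            # standby replica in a second AZ
        "PubliclyAccessible": False,    # keep the instance private
    }


# Hypothetical identifier; Multi-AZ enabled for a production migration.
instance = replication_instance_params("orders-migration", multi_az=True)

# To create the instance for real (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("dms").create_replication_instance(**instance)
```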

Step 3: Configure Migration Tasks

Once the replication instance is set up, configure migration tasks that define the specific data to be migrated and the type of migration (full load, change data capture, or both).
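A task definition combines a migration type with table mappings, a JSON document of rules that select which schemas and tables to migrate. The following sketch builds both for the DMS `CreateReplicationTask` API. The helper names, the task identifier, and the ARNs are hypothetical placeholders.

```python
import json

# The three migration types accepted by DMS tasks.
MIGRATION_TYPES = ("full-load", "cdc", "full-load-and-cdc")


def selection_rule(rule_id, schema, table="%"):
    """Selection rule including matching tables ('%' is a wildcard)."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": str(rule_id),
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": "include",
    }


def task_params(identifier, source_arn, target_arn, instance_arn,
                migration_type, rules):
    """Build keyword arguments for the DMS CreateReplicationTask call."""
    if migration_type not in MIGRATION_TYPES:
        raise ValueError(f"migration_type must be one of {MIGRATION_TYPES}")
    return {
        "ReplicationTaskIdentifier": identifier,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": migration_type,
        # The API expects the table mappings as a JSON string.
        "TableMappings": json.dumps({"rules": rules}),
    }


# Hypothetical ARNs; real ones are returned when the resources are created.
task = task_params(
    "orders-full-load-and-cdc",
    "arn:aws:dms:us-east-1:123456789012:endpoint:SOURCEEXAMPLE",
    "arn:aws:dms:us-east-1:123456789012:endpoint:TARGETEXAMPLE",
    "arn:aws:dms:us-east-1:123456789012:rep:INSTANCEEXAMPLE",
    "full-load-and-cdc",
    [selection_rule(1, "orders")],
)
```

Choosing `full-load-and-cdc` copies the existing data first and then keeps applying ongoing changes, which is the usual choice when the source must stay online.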

Step 4: Start the Migration

With everything configured, start the migration process. Amazon DMS will migrate the data as specified in your migration tasks, ensuring minimal downtime and continuous data replication.
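Starting a task comes down to one API call, `StartReplicationTask`, which takes the task ARN and a start type. The sketch below validates the start type before building the request. The helper name and the ARN are hypothetical.

```python
# Start types accepted by the DMS StartReplicationTask API.
START_TYPES = ("start-replication", "resume-processing", "reload-target")


def start_task_params(task_arn, start_type="start-replication"):
    """Build keyword arguments for the DMS StartReplicationTask call.

    start-replication: first start of a task
    resume-processing: continue a stopped task from where it left off
    reload-target:     restart the task and reload the target tables
    """
    if start_type not in START_TYPES:
        raise ValueError(f"start_type must be one of {START_TYPES}")
    return {
        "ReplicationTaskArn": task_arn,
        "StartReplicationTaskType": start_type,
    }


# Hypothetical task ARN.
TASK_ARN = "arn:aws:dms:us-east-1:123456789012:task:TASKEXAMPLE"
first_start = start_task_params(TASK_ARN)
resume = start_task_params(TASK_ARN, "resume-processing")

# To start the task for real (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("dms").start_replication_task(**first_start)
```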

Step 5: Monitor and Optimize

Monitor the progress and performance of your tasks using the AWS Management Console. Amazon DMS exposes per-table statistics, Amazon CloudWatch metrics, and task logs that help you find bottlenecks and tune the migration.
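Monitoring can also be scripted. The sketch below summarizes a response shaped like the output of the DMS `DescribeTableStatistics` API and flags tables whose state mentions an error. The sample response is hand-written for illustration, and matching on the word "error" in `TableState` is a simplifying assumption.

```python
def summarize_table_statistics(response):
    """Return (table, state, full_load_rows, cdc_changes) per table."""
    summary = []
    for stats in response.get("TableStatistics", []):
        name = f'{stats["SchemaName"]}.{stats["TableName"]}'
        cdc_changes = (stats.get("Inserts", 0)
                       + stats.get("Updates", 0)
                       + stats.get("Deletes", 0))
        summary.append((name, stats.get("TableState", "unknown"),
                        stats.get("FullLoadRows", 0), cdc_changes))
    return summary


def tables_with_errors(response):
    """Names of tables whose state string mentions an error."""
    return [name for name, state, _, _ in summarize_table_statistics(response)
            if "error" in state.lower()]


# Hand-written sample in the shape of a DescribeTableStatistics response.
sample = {
    "TableStatistics": [
        {"SchemaName": "orders", "TableName": "customers",
         "TableState": "Table completed", "FullLoadRows": 1200,
         "Inserts": 5, "Updates": 2, "Deletes": 1},
        {"SchemaName": "orders", "TableName": "invoices",
         "TableState": "Table error", "FullLoadRows": 0},
    ]
}

for row in summarize_table_statistics(sample):
    print(row)

# With boto3, the response would come from:
#   boto3.client("dms").describe_table_statistics(ReplicationTaskArn=...)
```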

Database Consolidation

Amazon DMS is well suited to database consolidation. Combining multiple databases onto a single database engine simplifies management, reduces costs, and can improve performance through better resource utilization.
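One way to consolidate, sketched below, is to run one task per source database and use a table-mapping transformation rule to rename each source schema, so that the sources do not collide on the shared target. The region names and schema names are hypothetical, and this is only one possible layout.

```python
import json


def consolidation_mappings(source_schema, target_schema):
    """Table mappings that copy one schema and rename it on the target."""
    rules = [
        {   # Select every table in the source schema.
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "1",
            "object-locator": {"schema-name": source_schema,
                               "table-name": "%"},
            "rule-action": "include",
        },
        {   # Rename the schema so each source gets its own namespace.
            "rule-type": "transformation",
            "rule-id": "2",
            "rule-name": "2",
            "rule-target": "schema",
            "object-locator": {"schema-name": source_schema},
            "rule-action": "rename",
            "value": target_schema,
        },
    ]
    return json.dumps({"rules": rules})


# Hypothetical scenario: three regional databases, each with a "public"
# schema, consolidated into one target database.
mappings_by_region = {
    region: consolidation_mappings("public", f"sales_{region}")
    for region in ("emea", "apac", "amer")
}
```

Each JSON string would be passed as the table mappings of the task that migrates the corresponding regional database.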

Benefits of Database Consolidation

- Simplified Management: Managing a single database engine is easier than handling multiple disparate systems.

- Cost Reduction: Consolidating databases can lead to significant cost savings by reducing licensing and maintenance expenses.

- Improved Performance: A consolidated database environment can optimize resource utilization and enhance overall performance.

Schema Conversion Tool (SCT)

The AWS Schema Conversion Tool (SCT) complements Amazon DMS by handling the schema side of a migration. SCT converts source database schemas to a format compatible with the target database engine. This covers database objects such as tables, indexes, and views, as well as application code such as stored procedures and functions.

Key Features of SCT

- Automatic Conversion: SCT automates schema conversion, reducing the manual effort required.

- Assessment Reports: Detailed assessment reports highlight incompatibilities or conversion issues, enabling proactive resolution.

- Data Warehouse Support: SCT supports data warehouse conversions, allowing businesses to migrate large-scale analytical workloads to AWS.

Additional Database Services

AWS offers a variety of managed database services that complement Amazon DMS, providing a comprehensive suite of tools to meet diverse data needs.

Amazon DocumentDB

Amazon DocumentDB (with MongoDB compatibility) is a fully managed document database service designed for JSON-based workloads. It offers high availability, scalability, and security, which makes it a good fit for modern applications.

Amazon Neptune

Amazon Neptune is a fully managed graph database service optimized for storing and querying highly connected data. It supports Property Graph and RDF models, making it suitable for social networking, recommendation engines, and fraud detection.

Amazon Quantum Ledger Database (QLDB)

Amazon QLDB is a fully managed ledger database providing a transparent, immutable, and cryptographically verifiable transaction log. It is designed for applications requiring an authoritative transaction record, such as financial systems, supply chain management, and identity verification. AWS has announced end of support for QLDB (July 31, 2025), so check its current status before adopting it for new projects.

Amazon Managed Blockchain

Amazon Managed Blockchain enables the creation and management of scalable blockchain networks, supporting frameworks like Hyperledger Fabric and Ethereum. It is well suited to building decentralized applications.

Amazon ElastiCache

Amazon ElastiCache is a fully managed in-memory data store and cache service supporting Redis and Memcached. It accelerates web application performance by reducing latency and increasing throughput, and is suitable for caching, session management, and real-time analytics.
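The caching use case typically follows the cache-aside pattern: check the cache first, and fall back to the slower data source only on a miss. The sketch below shows the pattern with an in-process dictionary standing in for the cache. It is not ElastiCache client code, and the class and function names are hypothetical. In a real deployment the dictionary would be replaced by a Redis or Memcached client connected to the ElastiCache endpoint.

```python
import time


class CacheAside:
    """Cache-aside lookup with a per-entry time to live (TTL)."""

    def __init__(self, loader, ttl_seconds=60.0, clock=time.monotonic):
        self._loader = loader      # slow source of truth, e.g. a database
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}           # key -> (expires_at, value)

    def get(self, key):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]        # cache hit, still fresh
        value = self._loader(key)  # cache miss or expired: load and store
        self._store[key] = (now + self._ttl, value)
        return value


calls = []


def slow_lookup(key):
    """Stand-in for a database query; records each call it receives."""
    calls.append(key)
    return f"profile-for-{key}"


cache = CacheAside(slow_lookup, ttl_seconds=30)
first = cache.get("user:42")   # miss: calls slow_lookup
second = cache.get("user:42")  # hit: served from the cache
```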

Amazon DynamoDB Accelerator (DAX)

Amazon DynamoDB Accelerator (DAX) is a fully managed, in-memory cache for DynamoDB that improves read performance, reducing response times from milliseconds to microseconds. It suits read-heavy, latency-sensitive workloads such as gaming, media, and mobile applications.

Conclusion

AWS Database Migration Service (DMS) is a versatile tool that simplifies database migration to the AWS cloud. Whether you are consolidating databases, converting schemas with the Schema Conversion Tool, or adopting additional AWS database services such as Amazon DocumentDB, Amazon Neptune, Amazon QLDB, Amazon Managed Blockchain, Amazon ElastiCache, or Amazon DAX, AWS offers a broad suite of solutions to meet your data needs.